[Updated June 2023]

DevOps may be one of the most hyped concepts in the tech industry in recent times. Yet what it actually consists of is the subject of much debate: some describe DevOps as a culture of process improvement, whilst others describe it in purely technological terms of automation and cloud technologies.

The Origins of DevOps

What few disagree on though are its origins. In the tech industry, it has long been accepted that technologists are either “devs”: those who “create”, or “ops”: those who “build and maintain”. Developers write code while engineers build the system and keep it running. Conflict frequently emerges between these two camps and their seemingly incongruent goals – whereas development teams are motivated and measured by their high change frequency and scale (deploying features, fixes, and improvements), operations teams are judged by reliability and consistency, qualities which are often seen as an outcome of low change frequency and scale (though we shall see later how this isn’t necessarily true). This often results in an antagonistic relationship between the two teams.

DevOps is, or at least originated as, the effort to reconcile this fracture and improve business performance.

“…all ideas are second-hand, consciously and unconsciously drawn from a million outside sources.” Mark Twain

At a high level, the practice of DevOps focuses on culture, process, velocity, feedback loops, repeatability via automation, responsiveness to change, and continuous improvement. These are often condensed to CALMS: Culture, Automation, Lean, Measurement, Sharing. These practices have accelerated the web-scale revolution behind high-performance tech giants such as Google, Netflix, Amazon, and Facebook.

However, these concepts are not new. They have been used by industrialists, researchers, and technologists to improve the quality and efficiency of production since the dawn of the industrial revolution.

Industry and Scientific Management

In 1620 Francis Bacon codified what was to become the fundamental basis for empirical knowledge: the origin of the scientific method. Bacon’s method described the conception of a theory based upon observation, and the use of experiments to test the theory. 400 years on, we still use Bacon’s approach to create and test theories, monitor systems and check technological functionality.

“In the past, the man has been first; in the future, the system must be first.” Frederick Taylor

Frederick Taylor, in the 1880s, applied the scientific method to management and workflows to improve labour productivity. He was one of the first people to deem work itself worthy of systematic study, using the principles that Bacon had set out more than 250 years before. Whilst Taylor’s views on what makes a “good” worker were somewhat disturbing – he defined the “best” worker as “so stupid and so phlegmatic that he more nearly resembles in his mental make-up the ox than any other type.” – Taylorism had a huge impact on productivity across the industrialised world.

Taylor summed up his efficiency strategies in the 1911 book “The Principles of Scientific Management.” This was voted the most influential management book of the twentieth century by Fellows of the Academy of Management in 2001. Without Taylor, it’s unlikely that Apple or Google would even exist as they do now.

20th Century Production

At the beginning of the 20th Century, most manufacturing utilised inefficient techniques – cars, for instance, were built the way you or I would go about the task, by assembling all the parts in one place: craft production. However, when demand for cars increased, it became clear that a form of linear, or mass, production was needed. One of the best-known examples of the production line is the one adopted by Henry Ford in 1913 for the Ford Model T, which was based on Taylor’s principles. Through the use of time and motion studies, Ford refined his production line until he had reduced the production time for a car from over twelve hours to just 93 minutes. He also introduced to mainstream manufacturing the concept of repeatability and standardisation. In contrast to Taylor, however, Ford always maintained his belief in the importance of the skill and craftsmanship of the worker.

“Without data, you’re just another person with an opinion.” William Edwards Deming

In the 1950s, William Edwards Deming, a statistician, physicist, and management consultant, began to apply statistical analysis to manufacturing. Deming found that prioritising quality over throughput would actually decrease costs and improve productivity. Whilst Taylorism and scientific management had boosted productivity, quality had suffered. Defects were sent down the line and built into finished products because workers were incentivised to ignore flaws in order to meet quotas.

He defined what is now known as the Deming Cycle: Plan – Do – Check – Act. This is similar to the software development lifecycle most of the technology industry use today. Deming championed continual analysis and improvement of processes – one of the key tenets of DevOps.

He saw effective quality assurance as an essential function of high-performing organisations, which is the key message of the third of his “Fourteen Points”, the key principles of management for transforming business effectiveness:

- Constancy of purpose, with the aim to become competitive and stay in business, and to provide jobs.

- Adopt the new philosophy. Embrace change.

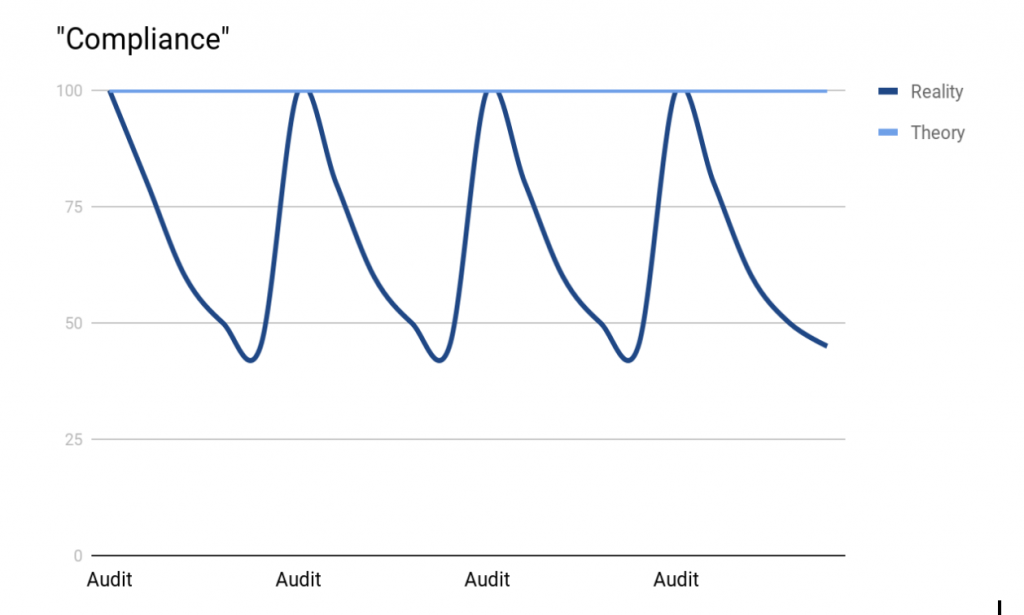

- Cease dependence on inspection to achieve quality. Build quality checks and feedback loops into the process.

- End the practice of awarding business on the basis of lowest bid. Build long term relationships with suppliers, and value loyalty and trust.

- Continuously improve processes, aim to improve quality and productivity, which in turn leads to cost reductions through less wastage and higher efficiencies.

- Institute training on the job and integrate development into employees’ roles.

- Institute leadership. Leadership should help people and machines do a better job, remove barriers to working effectively, identify improvements, and develop teams.

- Drive out fear. Fear paralyses people and teams. Transparent communication, motivation, respect and care for each other and each other’s work will contribute to this aim.

- Break down barriers between departments. Cross-functional teams can solve problems more easily and effectively than single-function teams or siloes.

- Eliminate slogans and exhortations for the workforce asking for zero defects. Defects (and quality) are a result of the system, not the individual.

- Eliminate targets or quotas. Prioritise quality over quantity, and quantity will follow.

- Permit pride of workmanship. Eliminate management by objective or by numbers. Employees feel more satisfaction when they get a chance to execute their work well and professionally, rather than trying to meet a quota.

- Institute training and self-improvement. Encourage employees to study for themselves and to see their studies and training as a self-evident part of their jobs.

- The transformation is everyone’s job. Transformation happens only when everyone in the organisation works to accomplish it.

Deming’s System of Profound Knowledge is the culmination of his work and ties together his seminal theories on quality, management and leadership into four interrelated areas:

- Appreciation for a system,

- Knowledge of variation,

- Theory of knowledge

- Psychology

Each area corresponds to one or more of his fourteen points, and we can reflect on how these four areas correspond to fundamental DevOps tenets too.

Appreciation for a system means that as a leader, engineer, developer or tester, you ought to understand the system that you are looking to work within – and that thoroughly understanding that system endows you with far greater capacity to improve it. This is systems thinking, a concept which will be revisited throughout this book.

Knowledge of variation refers to two types of “cause” determined by Deming: “Common” and “Special”. Common causes are those anticipated by, or inherent to, a system. An example of this would be scaling: you might know that a particular system generates logs at a rate of 500GB per day, and as a result you build functions into your system to deal with this growing demand for storage. This growth (the “cause”) is understandable and predictable, and thus you are able to implement measures to manage the variation. Deming’s second cause is “Special”, and refers to those aspects that are unknown or unpredictable, such as a change that had unintended consequences, a datacentre outage, or action by a malicious third party. Deming estimated that around 94% of quality issues (in his case, in manufacturing, but the same principle applies to modern software delivery) stem from “common” causes, but human nature looks for the “special” cause: the one-off event, the human error, or the bad actor at play. If someone accidentally shuts down a production server, Deming’s solution is not to fire the human (thereby removing the unpredictable, unknown element), but to build improvements into the system that prevent a human making that mistake again, or prevent that mistake from affecting the system.
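One common way to separate the two kinds of cause in practice is a simple control chart: variation that stays within limits derived from historical data is treated as common, and points outside those limits are flagged for investigation as possibly special. The sketch below is illustrative only. The function names, the three-standard-deviation limits, and the daily log volumes are all assumptions, not figures from Deming or from any real system.

```python
from statistics import mean, stdev


def control_limits(baseline):
    """Return (lower, upper) limits at the mean +/- 3 sample standard deviations."""
    centre = mean(baseline)
    spread = stdev(baseline)
    return centre - 3 * spread, centre + 3 * spread


def special_causes(baseline, observations):
    """Return the observations that fall outside the limits derived from the baseline."""
    lower, upper = control_limits(baseline)
    return [x for x in observations if x < lower or x > upper]


# Hypothetical daily log volumes in GB for a system that normally produces about 500GB per day.
baseline_gb = [495, 502, 498, 505, 500, 497, 503, 499, 501, 500]
new_days_gb = [504, 496, 720, 499]

# Only the 720GB day lies outside the limits; the other days are ordinary variation.
print(special_causes(baseline_gb, new_days_gb))
```

A flagged point is a prompt to look at the system, not proof of a one-off event: the limits only say that the observation is unusual relative to the baseline.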

Deming’s theory of knowledge concentrates on the importance of understanding our own knowledge. How do we discern what is true from what is false? How do we identify our own innate biases, and how can we make ourselves less susceptible to confirmation bias? Deming goes back to Bacon’s scientific method with the Plan-Do-Check-Act cycle, reflecting the concept of creating a hypothesis and then testing those assumptions. People appear to learn more effectively when they make predictions. Making a prediction forces us to think ahead about the potential outcomes and also causes us to examine more deeply the system that we’re working in or on.

At around the same time, after studying consumer behaviour in supermarkets, the Toyota Motor Corporation began using Kanban (which means “signboard” in Japanese) to control and record work. Kanban boards have vertical columns with work packages in the form of cards to represent stages in a process. Each process is a “customer” of the preceding process to the left – that is, the work is “pulled” from left to right, rather than “pushed”. This concept reduces inventory pile-up, enabling a delivery system called just-in-time and minimising waste. It also aids the identification of bottlenecks in the process by highlighting Work In Progress (WIP). Kanban makes “work” visible, and making work visible is crucial to further improvement, because “you can’t manage what you don’t measure”.
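The pull mechanism and WIP limits described above can be sketched in a few lines. This is a minimal model, not a description of Toyota’s system or of any Kanban tool: the `KanbanBoard` class, its method names, and the column names are hypothetical and chosen only for illustration.

```python
class KanbanBoard:
    """A toy board: ordered columns, optional WIP limits, and pull-only movement."""

    def __init__(self, columns, wip_limits=None):
        self.order = list(columns)
        self.columns = {name: [] for name in self.order}
        self.wip_limits = dict(wip_limits or {})

    def has_capacity(self, column):
        """True if the column has no WIP limit or is currently below it."""
        limit = self.wip_limits.get(column)
        return limit is None or len(self.columns[column]) < limit

    def add(self, card):
        """Place a new card in the first column; return False if that column is full."""
        first = self.order[0]
        if not self.has_capacity(first):
            return False
        self.columns[first].append(card)
        return True

    def pull(self, column):
        """Pull the oldest card from the preceding column into `column`.

        Returns the card, or None if there is nothing to pull or the WIP limit is reached.
        """
        index = self.order.index(column)
        if index == 0:
            raise ValueError("the first column has no upstream column to pull from")
        upstream = self.order[index - 1]
        if not self.columns[upstream] or not self.has_capacity(column):
            return None
        card = self.columns[upstream].pop(0)
        self.columns[column].append(card)
        return card


board = KanbanBoard(["todo", "doing", "done"], wip_limits={"doing": 2})
for card in ["A", "B", "C"]:
    board.add(card)

board.pull("doing")         # pulls "A"
board.pull("doing")         # pulls "B"
print(board.pull("doing"))  # None: "doing" is at its WIP limit, so "C" waits in "todo"
board.pull("done")          # finishing "A" frees capacity
print(board.pull("doing"))  # "C" can now be pulled
```

Because work only moves when the downstream column asks for it, a full column is immediately visible as cards queueing to its left, which is how the board exposes bottlenecks.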

“Any improvements made anywhere besides the bottleneck are an illusion.” Eliyahu M. Goldratt

The above constitutes Goldratt’s Theory of Constraints. In his 1984 management novel “The Goal”, Eli Goldratt built on Deming’s ideas and codified Lean Production, a precursor of DevOps methodology. He described a failing manufacturing plant where Alex, the main character, is brought in to turn things around within three months. Through a series of telephone calls and meetings with an acquaintance called Jonah (another physicist, like Deming), Alex solves the organisation’s problems by utilising pull rather than push processes, reducing WIP, and employing the Theory of Constraints. “The Goal” itself, Goldratt demonstrates, is simply to make money for the business. Anything else, if it cannot be demonstrated to help make money, is likely to be vanity.
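Goldratt’s point about bottlenecks can be illustrated with a deliberately simplified model: if work passes through stages in series, the throughput of the whole line is capped by its slowest stage. The stage names and capacities below are hypothetical, and real delivery pipelines have queues and variability that this sketch ignores.

```python
def throughput(stage_capacity):
    """Throughput of a serial pipeline is limited by its slowest stage."""
    return min(stage_capacity.values())


def bottleneck(stage_capacity):
    """Return the name of the stage with the lowest capacity."""
    return min(stage_capacity, key=stage_capacity.get)


# Hypothetical capacities, in changes per day, for a three-stage delivery pipeline.
pipeline = {"build": 20, "test": 5, "deploy": 12}
print(bottleneck(pipeline), throughput(pipeline))

# Doubling build capacity changes nothing, because build is not the constraint.
faster_build = {**pipeline, "build": 40}
print(throughput(faster_build))

# Doubling test capacity doubles the throughput of the whole pipeline.
faster_test = {**pipeline, "test": 10}
print(throughput(faster_test))
```

In this model, effort spent anywhere other than the constraint leaves the overall figure unchanged, which is the sense in which such improvements are “an illusion”.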

People and Process

By the 1980s, the modern manufacturing revolution was in full swing. However, its often reductionist approach to workers wasn’t helpful, and staff turnover was high. Among those to recognise this was Burrhus Frederic Skinner, a psychologist, author, inventor and the Edgar Pierce Professor of Psychology at Harvard University. In describing the nature of quality work and happiness, he said:

“It’s the difference between a craftsman who makes a complete chair and a person on an assembly line who makes only the legs. The craftsman’s work is constantly reinforced by the process of seeing the chair take form, and finally of producing the finished chair. But the assembly-line worker sees only chair leg after chair leg — never the completed product.”

This is a near-definitive example of “systems thinking”, another key tenet of DevOps.

Being able to see the end result of the process is key to improving quality in the individual stages – how can someone build the perfect component if they don’t understand the final product? Systems thinking is a cultural practice, rather than a process or tool, and relies on believing in the capability of team members to make small but important decisions regarding their part in the process, and thus being more invested in the outcome.

Further developments in understanding of how to develop an aspirational working culture came once again from Toyota when in 2001 they defined their philosophy, values and manufacturing ideals in four key headlines, “The Toyota Way”. These were:

- Long-Term Philosophy – Base your management decisions on a long-term philosophy, even at the expense of short-term goals.

- The Right Process Will Produce the Right Results – Focus on pull processes, managing WIP, and making work visible.

- Add Value to the Organization by Developing Your People – Provide effective training, highlight team success over individual success, and challenge your partners and suppliers.

- Continuously Solving Root Problems Drives Organizational Learning – Continuously improve (in Japanese, kaizen), use the “5 whys” to get to the root cause of problems, standardise, decide slowly and act quickly, and encourage a knowledge sharing culture.

Everything in The Toyota Way and Lean Production aligns with, and indeed comprises part of, the DevOps principles.

The Agile Manifesto

Also that year, at Snowbird resort in Utah, seventeen developers, frustrated with traditional heavyweight project management methodologies, came up with the Agile Manifesto. At the time, industry experts estimated that the time between a validated business need and an actual application in production was around three years. There was a real desire to find more lightweight ways to deliver value from technology, faster. The Agile manifesto is as follows:

Individuals and interactions over processes and tools

Working software over comprehensive documentation

Customer collaboration over contract negotiation

Responding to change over following a plan

The Agile Manifesto gives a clear guide to what to prioritise. For example, whilst documentation is valuable, it is more important to the business that the software works. The most well-known element of Agile is possibly the fourth line: Responding to change over following a plan. Given how quickly customer requirements, finances, and technology can change, it is often unrealistic to believe that specifications created at the start of a project will remain 100% accurate and true throughout the lifetime of the project. Thus, responding to change is one of the ways that software teams can provide a competitive edge over teams that do not.

Whilst Agile methodology is not fundamentally part of DevOps, the two usually go hand-in-hand. In technology teams, one is certainly easier to achieve in the presence of the other.

The First DevOps “Role”

Shortly after the Agile manifesto was written, Google was undergoing rapid expansion. As one of the few web-scale tech businesses at the time, they experienced the unprecedented challenge of trying to rapidly introduce new features whilst maintaining a highly complex, always-on, massive-scale platform. The Site Reliability Engineering (SRE) team, led by Ben Treynor, was their solution.

A Site Reliability Engineer (SRE) would typically spend up to half their time performing operations-related work such as troubleshooting system issues and performing maintenance. They would spend the other half of their time on development tasks such as new features, scaling challenges, or automation. An SRE is an example of one of the first true DevOps roles in technology.

DevOps Detractors

“…the opportunities for gaining IT-based advantages are already dwindling… And as for IT-spurred industry transformations, most of the ones that are going to happen have likely already happened or are in the process of happening.” Nicholas Carr

It’s worth noting that not all in business recognised the potential of DevOps. In May 2003, Nicholas Carr published an article in the Harvard Business Review, titled “IT Doesn’t Matter.” In this now infamous piece, Carr defines IT as a commodity, in the same category as electricity or water. He suggests that being the first to utilise a particular technology provides only a small competitive advantage, since your competitor can purchase the same system or replicate the same technology, but you incur the lion’s share of the cost by doing it first. He stated:

“The key to success, for the vast majority of companies, is no longer to seek advantage aggressively but to manage costs and risks meticulously. If, like many executives, you’ve begun to take a more defensive posture toward IT in the last two years, spending more frugally and thinking more pragmatically, you’re already on the right course. The challenge will be to maintain that discipline when the business cycle strengthens and the chorus of hype about IT’s strategic value rises anew.”

Carr’s piece was taken very seriously at the time, and still is by many business leaders. Perhaps it is fortunate for organisations such as Salesforce and Google that they pursued technology as a competitive advantage, and disregarded Carr’s advice.

Improving IT

It is not surprising, however, that technology had such a poor reputation at the time, since research suggests that at least 80% of outages were (and potentially still are) self-inflicted. A book by Kevin Behr, Gene Kim and George Spafford, The Visible Ops Handbook (2004), described a methodology to improve operational IT. This methodology of “Visible Ops” is described in four stages:

- Stabilize Patient, Modify First Response – This first step controls risky changes and reduces MTTR (Mean Time To Resolution).

- Catch and Release, Find Fragile Artifacts – Here assets, configurations and services are inventoried in order to identify those with the lowest change success rates, highest MTTR and highest downtime costs.

- Establish Repeatable Build Library – This creates repeatable builds for critical services, to make it “cheaper to rebuild than to repair.”

- Enable Continuous Improvement – This implements metrics to enable continuous improvement of processes.

To some degree, these four stages are evolutions of elements of The Toyota Way. They formed an embryonic codification of what were to become the principles of DevOps.

Over the next few years, the technology industry underwent a paradigm shift, where methods of working were analysed, and technology became far more fundamental to the success of organisations (possibly to the chagrin of Nicholas Carr).

#DevOps

In 2008, the term DevOps was used in the industry for the first time. There’s some confusion and misinformation regarding how this came about, but I spoke to Andrew Clay Shafer and Patrick Debois, both widely credited with creating the term “DevOps”, to get the full story…

In August 2008 at the Agile Conference in Toronto, software developer Andrew Clay Shafer posted notice of a discussion group session entitled “Agile Infrastructure.” Just one person, system administrator Patrick Debois, attended. Debois had become frustrated by the now ubiquitous conflicts between developers and operations while working on a data centre migration for the Belgian government and was looking for solutions. Shafer actually skipped his own session because he didn’t think anyone was interested, but Debois later tracked him down for a chat in the hallway. Inspired by that hallway discussion, they formed an “Agile Systems Administration” Google Group.

In October the following year, 2009, Patrick organised the first DevOpsDays conference in Belgium, though it was Shafer who (it’s believed) coined the term DevOps by tweeting using the #DevOps hashtag at the Velocity conference in June 2009 whilst watching the now famous “10 deploys a day” talk by John Allspaw and Paul Hammond of Flickr.

The Role of Cloud Technology

It wasn’t long after the #DevOps hashtag was first used that adoption of cloud technology accelerated rapidly. The AWS EC2 service (virtual servers on-demand) only went out of beta in late 2008. It was (and still is) a fast evolving technology. Cloud technology tends to align well with DevOps practices, because its features lend themselves to elasticity and scaling, automation, measurement and repeatability, key fundamentals of DevOps.

The tide had turned. Increasingly, organisations began looking at ways of improving software deployments, moving away from large, disruptive (and frankly, stressful) deployments, towards a model of more frequent, smaller, low-risk deployments.

Jez Humble and Dave Farley wrote what is still one of the definitive texts on this approach: “Continuous Delivery” in 2010. It describes in detail how to automate your build, deployment, and testing pipeline so that you can release changes in hours or even minutes. That might not seem that impressive today, but at the time, a release cycle of months or years was very common.

Continuous delivery, according to Farley and Humble, requires:

- Comprehensive configuration management

- Continuous integration and short lived branches (in reference to Trunk-Based Development)

- Continuous testing

The automation of the build, deployment, and testing process, coupled with better collaboration between development, test, and ops teams, means that changes can be released rapidly. These smaller, low risk changes are more easily rolled back should something go wrong. “Continuous Delivery” showed how to increase velocity of change, whilst reducing risk and improving quality.

With cloud technology becoming mainstream and a desire to release software more rapidly, automation technology and tools took off. Software firms such as Puppet and Chef grew fast as developers and engineers strove to streamline their build processes and manage ever-increasing scales of infrastructure in the cloud. These tools also provided a new ability to fire up duplicate environments, such as staging, QA, test and validation, within minutes rather than weeks or months. Organisations exploiting these automation tools and using native cloud technologies felt that they were gaining significant competitive advantage by doing so, and what evidence there was, was in their favour. Even Gartner, in a 2011 report, stated that:

“By 2015, DevOps will evolve from a niche strategy employed by large cloud providers into mainstream strategy employed by 20% of Global 2000 organisations.” Gartner, March 18, 2011.

In the same report, Gartner recognised that ITIL and other “top-down” best practice frameworks had not delivered on their goals, and IT organisations were looking for something new. They understood that because DevOps was primarily a cultural shift, driven from the ground up, it could prove far easier for technology departments to adopt than ITIL or similar frameworks.

The Codification of DevOps

Two years later, Gene Kim, Kevin Behr and George Spafford wrote The Phoenix Project, a novel about a failing organisation struggling to meet the demands of modern technological complexity and competition. This novel inspired technology leaders and engineers alike, because it described with eerie familiarity what it was like to work in a technology organisation with poor change control, problematic “Ops vs Devs” cultures and inadequate visibility and monitoring of work or performance.

The Phoenix Project was inspired by The Goal by Eli Goldratt. It demonstrated a number of actionable ways to improve the performance of your IT organisation, such as effective (but lean) change control, effective (and again, lean) testing, reducing WIP and unplanned work, and avoiding letting anyone become the bottleneck for processes. The “bottleneck person” in the book is Brent, a character who knows everything but hasn’t documented anything. A key message of the book? Don’t be Brent.

Gene also introduces in the Phoenix Project one of the first efforts to codify DevOps, using “The Three Ways”:

- Flow (or Systems Thinking)

- Feedback Loops

- Continuous Improvement

These “Three Ways” are concepts that echo the Toyota Way, Deming’s “Plan-Do-Check-Act” cycle, and other best practices, made specific to the DevOps context. Gene’s subsequent book, written with Jez Humble (of “Continuous Delivery”), Patrick Debois and John Willis in 2016, “The DevOps Handbook”, goes deeper into the technical application of The Three Ways. It explores how to measure what matters to the business, and how to implement technical processes such as Continuous Integration and Continuous Delivery.

It didn’t take long to realise that there was another functional silo with a somewhat different set of interests than Dev or Ops: Security. Security, the IT profession realised, should be built into code as it is developed rather than added later on by a different team. Predictably, the idea became known as DevSecOps.

Gene also introduces the concept of “DevSecOps” – the integration of DevOps practices into the application of information security. If The Phoenix Project was the “why” to do DevOps, The DevOps Handbook provides the “how”.

Measuring DevOps

Given that DevOps is at least partly about effective measurement and continuous improvement, it’s self-evident that we, as an industry, should measure the success of DevOps itself. In 2012, Puppet began surveying people working in technology to understand the adoption and development of DevOps practices. They published “State of DevOps” reports which focussed on twenty key capabilities. These fall along familiar categories:

- Technical (version control, test automation, deployment automation, trunk-based development)

- Process (WIP limits, visual management, visualisation of the value stream)

- Cultural (team culture, learning cultures, and job satisfaction).

Now taken over by DORA (DevOps Research and Assessment), an organisation created by Nicole Forsgren, Gene Kim, Jez Humble and Soo Choi, the State of DevOps Report is being improved every year. According to Alanna Brown at Puppet, they “have built the deepest and most widely referenced body of DevOps research available, drawing on the experience of more than 30,000 technical professionals around the world.” The data from these reports demonstrates that Carr’s view of IT as a cost centre was misguided. It is clear that IT is a powerful driver of value to an organisation where velocity, security and stability are essential for success.

“…software delivery is an exercise in continuous improvement, and our research shows that year over year the best keep getting better, and those who fail to improve fall further and further behind.” Nicole Forsgren

On the back of four years of State of DevOps reports, Nicole Forsgren, together with Jez Humble and Gene Kim, wrote the illuminating book “Accelerate”. It explains which metrics correlate to organisational performance, and what you should measure in order to find out where and how to improve.

Forsgren states that the key metrics separating high from low performers in tech organisations are:

- Deployment frequency (and pain!)

- Lead time for change (from code commit to code deploy)

- Mean Time To Restore (MTTR)

- Change failure rate

Interestingly, the first two of these metrics are throughput (traditionally development-oriented) measures; the last two are stability (traditionally operations-oriented) measures.
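As a rough illustration of how these four measures might be derived from deployment records, consider the sketch below. It is not the method used in “Accelerate” or the State of DevOps reports, which are survey-based. The `Deployment` record, its field names, and the sample data are all hypothetical, and time to restore is measured here from the moment of deployment, which is a simplifying assumption.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean, median
from typing import Optional


@dataclass
class Deployment:
    """A hypothetical record of one production deployment."""
    committed_at: datetime
    deployed_at: datetime
    failed: bool = False
    restored_at: Optional[datetime] = None  # set only when a failed change was recovered


def four_key_metrics(deployments, period_days):
    """Compute throughput and stability measures for a non-empty list of deployments."""
    if not deployments:
        raise ValueError("at least one deployment is required")
    lead_times = [d.deployed_at - d.committed_at for d in deployments]
    failures = [d for d in deployments if d.failed]
    restore_times = [
        d.restored_at - d.deployed_at for d in failures if d.restored_at is not None
    ]
    return {
        # Throughput measures
        "deployments_per_day": len(deployments) / period_days,
        "median_lead_time_hours": median(t.total_seconds() for t in lead_times) / 3600,
        # Stability measures
        "change_failure_rate": len(failures) / len(deployments),
        "mean_time_to_restore_hours": (
            mean(t.total_seconds() for t in restore_times) / 3600
            if restore_times
            else None
        ),
    }


start = datetime(2023, 6, 1, 9, 0)
sample = [
    Deployment(start, start + timedelta(hours=2)),
    Deployment(start + timedelta(days=1), start + timedelta(days=1, hours=4)),
    Deployment(
        start + timedelta(days=2),
        start + timedelta(days=2, hours=6),
        failed=True,
        restored_at=start + timedelta(days=2, hours=7),
    ),
    Deployment(start + timedelta(days=3), start + timedelta(days=3, hours=8)),
]

for name, value in four_key_metrics(sample, period_days=7).items():
    print(name, value)
```

Keeping the two throughput and two stability figures side by side makes it harder to improve one at the expense of the other without noticing.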

The State of DevOps in 2019

As of the 2018 State of DevOps report, the findings consistently show that:

- Software delivery and availability unlock competitive advantages.

- How you implement cloud infrastructure matters.

- Use of open source software improves performance.

- Outsourcing by function is rarely adopted by elite performers and hurts performance.

- Key technical practices drive high performance (e.g. monitoring, automated testing, security integration).

- Industry doesn’t matter when it comes to achieving high performance for software delivery.

The statistics show that the high performers exhibit 46 times more frequent code deployments than low performers. They have a 7 times lower change failure rate, over 2,500 times faster lead time from code commit to deployment, and are over 2,600 times faster to recover from incidents.

When an organisation can deploy quickly, recover rapidly, and suffer few outages, it has the ability to reach the market before competitors and respond to customer demand quickly. It will also provide more stable and secure service. This results, ultimately, in Goldratt’s “Goal”, making more money for the business.

Such a state is not reached by simply automating, using cloud technology, or recruiting a “DevOps Engineer” – it is the culmination of great team culture, continuous improvement, feedback loops, systems thinking, and a rigorous approach to using the right technology. DevOps is not a framework (like ITIL), an industry standard, a suite of tools, or a job title.

DevOps encompasses the culture, technologies, tools, skills and processes that enable organisations to go from idea to production as rapidly as possible, incurring low risk and cost, and providing high security and reliability at scale.

The definition of DevOps itself is continually evolving and improving, and while I may offer a definition as above, it will be out of date within days of writing, because, like the technology and services we build, it is continuously in flux, and being improved by the same people practising it.

Where does DevOps go next? I believe that the scope of DevOps needs to widen. As mentioned above, a large reason why DevOps is so successful is that it’s a ground-up movement, created and progressed by the actual people doing it (unlike ITIL, for example). However, this has meant that DevOps, naturally, focusses tightly on the technological functions of an organisation.

The next phase of DevOps includes practices and approaches such as “Platform as a Product” and also broadens the scope of DevOps to the wider organisation, evolving into “digital transformation” using Andrew Clay Shafer’s 5 Elements, Jabe Bloom’s Three Economies, and the broader, cross-sectoral concepts of resilience engineering in sociotechnical systems.

2023 Update: Safety Cultures and Platform Engineering

Over the past two to three years, DevOps has seen further maturity and adaptation to new norms, driven by unprecedented global circumstances and evolving technological trends. A particularly noteworthy shift has been the focus on building robust ‘Safety Cultures’. This approach emphasizes the creation of an environment where experimentation is encouraged, failures are seen as opportunities for learning, and psychological safety is paramount. Teams are given the latitude to innovate while knowing that missteps are not only tolerated but expected as part of the process of continuous improvement. This aspect has greatly enhanced the resilience of DevOps, fostering a more transparent, responsive, and adaptive culture.

Platform Engineering has also been a rising trend, presenting a shift in how organizations perceive their development infrastructure. Rather than treating platforms as a collection of tools and services, they are viewed as integrated products that evolve with the needs of the end-users, who are the developers. This perspective empowers developers, reduces overhead, and ultimately accelerates the delivery of value to the business.

The COVID-19 pandemic brought its own set of challenges and lessons. The necessity of remote working underlined the importance of strong communication channels, reliable cloud-based tooling, and the autonomy of distributed teams. It revealed the strength of DevOps practices in enabling organizations to maintain their pace of innovation even in the face of major disruptions. Companies that had already embraced DevOps were better positioned to navigate the transition to a remote work environment, demonstrating the value of adaptability inherent in the DevOps philosophy.

As we look to the future, the trajectory of DevOps and related methodologies appears more integrated and comprehensive. The focus will likely continue to expand beyond the technological realm, permeating deeper into business strategies and driving broader digital transformation initiatives. The trends of Safety Cultures and Platform Engineering are expected to solidify, with even greater emphasis on psychological safety, learning from failures, and treating internal platforms as products.

Furthermore, the remote working lessons from the pandemic will likely catalyze a shift towards more distributed, asynchronous ways of working. We may see a rise in ‘RemoteOps’, an evolution of DevOps practices adapted for a world where remote and flexible work arrangements become the norm. In this era, principles of effective remote communication, time-zone friendly practices, and trust-based management will become critical. In essence, the future of DevOps is about expanding its boundaries, integrating more closely with business goals, and continually evolving to meet the demands of our ever-changing world.