Most of my websites are built using WordPress on Linux in AWS, using EC2 for compute, S3 for storage and Aurora for the data layer. Take a look at sono.life as an example.

For this site, I wanted to build something that aligned with and demonstrated the key tenets of cloud technology: scalability, resiliency, availability and security. I wanted it designed for the cloud, not simply in the cloud.

I chose technologies that were cloud native, were as fast as possible, easily managed, version controlled, quickly deployed, and presented over TLS. I opted for Hugo, a super-fast static website generator that is managed from the command line. It’s used by organisations such as Let’s Encrypt to build super fast, secure, reliable and scalable websites. The rest of my choices are listed below. Wherever possible, I’ve used the native AWS solution.

- Framework: Hugo

- Source control: Git/GitHub

- DNS: AWS Route53

- Deployment: AWS CLI – boto/python

- Scripting: NPM

- Continuous Integration: CircleCI

- SSL: AWS Certificate Manager

- Storage: AWS S3

- Presentation layer: AWS CloudFront distribution

- Analytics and tracking: Google Analytics

- Commenting: Disqus

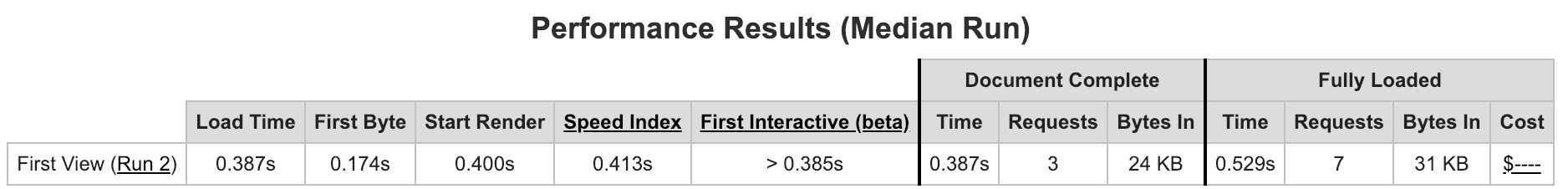

The whole site loads in less than half a second, and there are still improvements to be made. It may not be pretty, but it’s fast. Below are a walkthrough and notes that should help you build your own Hugo site in AWS. The notes assume that you know your way around the command line, that you have an AWS account, and that you have a basic understanding of the services involved in the build. I think I’ve covered all the steps, but if you try to follow this and spot a missing step, let me know.

Notes on Build – Test – Deploy:

Hugo was installed via Homebrew to build the site. If you haven’t installed Homebrew yet, just do it. Fetch it by running:

/bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)"

brew install hugo

One of the things I love about Hugo is the ability to make rapid, on-the-fly changes to the site and see the result instantly, running the Hugo server locally.

hugo server -w -D

The option -D includes drafts in the output, whilst -w watches the filesystem for changes, so you don’t need to rebuild with every small change, or even refresh in the browser.

To create content, simply run

hugo new $postname.md

Then create and edit your content, QA with the local Hugo server, and build the site when you’re happy:

hugo -v

V for verbose, obvs.

You’ll need to install the AWS CLI, if you haven’t already.

brew install awscli

Check it worked:

aws --version

Then set it up with your AWS IAM credentials:

aws configure
AWS Access Key ID [None]: <your access key>
AWS Secret Access Key [None]: <your secret key>
Default region name [None]: <your region name>
Default output format [None]: ENTER
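Before going further, it can help to confirm the tools are actually on your PATH. The helper below is only a sketch: `missing_tools` is a made-up name for illustration, not part of Hugo or the AWS CLI.

```shell
# Print the name of every listed command that is not on the PATH.
# (missing_tools is a hypothetical helper, not part of any CLI.)
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}

# Check the three tools this walkthrough relies on; no output means all are present.
missing_tools hugo aws git

# Once the tools are present, `aws sts get-caller-identity` confirms the credentials work.
```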

You don’t need to use R53 for DNS, but it doesn’t cost much and it will make your life a lot easier. Plus you can use funky features like routing policies and target health evaluation (though not when using CloudFront distributions as a target).

Create your record set in R53. You’ll change the target to a CloudFront distribution later on. Save the JSON below, with your own config, as sample.json:

{
  "Comment": "CREATE/DELETE/UPSERT a record",
  "Changes": [{
    "Action": "CREATE",
    "ResourceRecordSet": {
      "Name": "a.example.com",
      "Type": "A",
      "TTL": 300,
      "ResourceRecords": [{ "Value": "4.4.4.4" }]
    }
  }]
}

aws route53 change-resource-record-sets --hosted-zone-id ZXXXXXXXXXX --change-batch file://sample.json

Create a bucket. Your bucket name needs to match the hostname of your site, unless you want to get really hacky.

aws s3 mb s3://my.website.com --region eu-west-1

If you’re using CloudFront, you’ll need to set permissions that allow the CloudFront service to pull from S3. Or, if you’re hosting straight from S3, ensure you allow the correct permissions. There are many variations on how to do this. The AWS-recommended way is to set up an Origin Access Identity, but that won’t work if you’re using Hugo and need a custom origin for CloudFront (see below). If you don’t particularly mind visitors being able to access S3 assets directly, your S3 policy can be as below:

{
  "Version": "2012-10-17",
  "Statement": [{
    "Sid": "PublicReadGetObject",
    "Effect": "Allow",
    "Principal": "*",
    "Action": ["s3:GetObject"],
    "Resource": ["arn:aws:s3:::example-bucket/*"]
  }]
}
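The policy still has to be attached to the bucket, and the website endpoint used later only works once static website hosting is switched on. A minimal sketch of both steps follows. It assumes the policy is saved to a local file, that its `Resource` ARN has been changed to your bucket name, and that your theme generates a `404.html`. `configure_bucket` is a hypothetical helper name.

```shell
# Apply a bucket policy and enable static website hosting.
# Usage: configure_bucket <bucket-name> <policy-file>
# (configure_bucket is a hypothetical helper; the aws subcommands are standard.)
configure_bucket() {
  bucket=$1
  policy_file=$2
  # Attach the public-read policy to the bucket.
  aws s3api put-bucket-policy --bucket "$bucket" --policy "file://$policy_file" || return 1
  # Turn on website hosting so directory paths resolve to index.html.
  # 404.html is an assumption; use whatever error page your theme produces.
  aws s3 website "s3://$bucket" --index-document index.html --error-document 404.html
}
```

Calling `configure_bucket my.website.com policy.json` would run both commands in order and stop if the first one fails.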

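Request your SSL certificate at this time too. CloudFront only accepts ACM certificates issued in us-east-1, so request it in that region whatever region your bucket is in:

aws acm request-certificate --domain-name $YOUR_DOMAIN --subject-alternative-names "www.$YOUR_DOMAIN" --region us-east-1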

ACM will automatically renew your cert before it expires, so you can sleep easy at night without worrying about SSL certs expiring. That stuff you did last summer at band camp will still keep you awake though.
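A certificate can't be attached to a distribution until it has been validated and issued, which can take a while. Rather than reloading the console, the CLI can do the waiting. This is a sketch, assuming the certificate was requested in us-east-1 (the region CloudFront requires) and that you have the ARN returned by `request-certificate`. `wait_for_cert` is a hypothetical helper name.

```shell
# Block until ACM reports the certificate as validated, then print its status.
# Usage: wait_for_cert <certificate-arn>
# (wait_for_cert is a hypothetical helper; the aws subcommands are standard.)
wait_for_cert() {
  cert_arn=$1
  # The waiter polls ACM and returns once validation has completed.
  aws acm wait certificate-validated --certificate-arn "$cert_arn" --region us-east-1 || return 1
  # Print the final status, which should read ISSUED.
  aws acm describe-certificate --certificate-arn "$cert_arn" --region us-east-1 \
    --query 'Certificate.Status' --output text
}
```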

Note: Regarding custom SSL client support, make sure to select ONLY SNI. Supporting old steam-driven browsers on WinXP will cost you $600 a month, and I don’t think you want that.

The only way to serve a custom domain over HTTPS from S3 is to stick a CloudFront distribution in front of it, and by doing this you get the added bonus of a super fast CDN with over 150 edge locations worldwide.

Create your CloudFront distribution with a JSON config file, or straight through the CLI.

aws cloudfront create-distribution --distribution-config file://distconfig.json

Check out the AWS documentation for details on how to create your config file.

Apply your certificate to the CF distribution too, in order to serve traffic over HTTPS. You can choose to allow port 80 or redirect all requests to 443. Choose “custom” certificate to select your cert, otherwise CloudFront will use the Amazon default one, and visitors will see a certificate mismatch when browsing to the site.

When configuring my CloudFront distribution, I hit a few issues. First of all, it’s not possible to use the standard AWS S3 origin. You must use a custom origin that specifies the region of the S3 bucket, as below, in order for pretty URLs and CSS references in Hugo to work properly, i.e.

cv.tomgeraghty.co.uk.s3-website-eu-west-1.amazonaws.com

instead of

cv.tomgeraghty.co.uk.s3.amazonaws.com

Also, make sure to specify the default root object in the CF distribution as index.html.
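The custom origin hostname follows a predictable pattern, so it can be built from the bucket name and region. The sketch below assumes the dash-style `s3-website-<region>` format shown above. Some regions use `s3-website.<region>` instead, so check the AWS endpoint list for yours. `website_endpoint` is a hypothetical helper name.

```shell
# Build the S3 website endpoint hostname for a bucket and region.
# Assumes the dash-style "s3-website-<region>" format; some regions use
# "s3-website.<region>" instead. (website_endpoint is a hypothetical helper.)
website_endpoint() {
  printf '%s.s3-website-%s.amazonaws.com\n' "$1" "$2"
}

website_endpoint cv.tomgeraghty.co.uk eu-west-1
# prints cv.tomgeraghty.co.uk.s3-website-eu-west-1.amazonaws.com
```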

Now that your CF distribution is ready, anything in your S3 bucket will be cached to the CF CDN. Once the status of your distribution is “deployed”, it’s ready to go. It might take a little while at first setup, but don’t worry. Go and make a cup of tea.

Now, point your R53 record at either your S3 bucket or your CloudFront distribution. You can do this via the CLI, but doing it via the console means you can check to see if your target appears in the list of alias targets. Simply select “A – IPv4 address” as the record type, and choose your alias target (CF or S3) in the drop-down menu.
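If you would rather stay in the CLI, an alias record can be written as a change batch much like the earlier one. This is a sketch, not a tested recipe for your zone: `write_alias_batch` is a hypothetical helper, the hostname and the `dxxxxxxxxxxxxx.cloudfront.net` domain are placeholders, and `Z2FDTNDATAQYW2` is the fixed hosted zone ID that AWS documents for CloudFront alias targets.

```shell
# Write a Route 53 change batch that aliases a hostname to a CloudFront distribution.
# Usage: write_alias_batch <hostname> <cloudfront-domain> <output-file>
# (write_alias_batch is a hypothetical helper.)
write_alias_batch() {
  cat > "$3" <<EOF
{
  "Comment": "Alias $1 to CloudFront",
  "Changes": [{
    "Action": "UPSERT",
    "ResourceRecordSet": {
      "Name": "$1",
      "Type": "A",
      "AliasTarget": {
        "HostedZoneId": "Z2FDTNDATAQYW2",
        "DNSName": "$2",
        "EvaluateTargetHealth": false
      }
    }
  }]
}
EOF
}

# Placeholder values; substitute your own hostname and distribution domain.
write_alias_batch a.example.com dxxxxxxxxxxxxx.cloudfront.net alias.json

# Then apply it the same way as the earlier record:
# aws route53 change-resource-record-sets --hosted-zone-id ZXXXXXXXXXX --change-batch file://alias.json
```

`UPSERT` is used rather than `CREATE` so the existing A record is replaced instead of causing a conflict.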

Stick an index.html file in the root of your bucket, and carry out an end-to-end test by browsing to your site.

Build – Test – Deploy

Now you have a functioning Hugo site running locally, plus S3, R53, TLS and CloudFront, you’re ready to stick it all up on the internet.

Git push if you’re using Git, and deploy the public content via whichever method you choose. In my case, to the S3 bucket created earlier:

aws s3 cp public s3://$bucketname --recursive

The recursive switch ensures the subfolders and content will be copied too.

Crucially, because I’m hosting via CloudFront, a new deploy means the old CloudFront content will be out of date until it expires, so every deploy needs an invalidation to trigger a new fetch from the S3 origin:

aws cloudfront create-invalidation --distribution-id $cloudfrontID --paths /\*

It’s not the cleanest way of doing it, but it’s surprisingly quick to refresh the CDN cache so it’s ok for now.

Time to choose a theme and modify the hugo config file. This is how you define how your Hugo site works.

I used the “Hermit” theme:

git clone https://github.com/Track3/hermit.git themes/hermit

But you could choose any theme you like from https://themes.gohugo.io/

Modify the important elements of the config.toml file:

baseURL = "https://$your-website-url"
languageCode = "en-us"
defaultContentLanguage = "en"
title = "$your-site-title"
theme = "$your-theme"
googleAnalytics = "$your-GA-UA-code"
disqusShortname = "$your-disqus-shortname"

Get used to running a deploy:

hugo -v

aws s3 cp public s3://your-site-name --recursive

aws cloudfront create-invalidation --distribution-id XXXXXXXXXX --paths /\*
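Those three commands can be wrapped in one shell function that stops at the first failure, so a broken build never reaches the bucket. This is a sketch with one deliberate swap: it uses `aws s3 sync --delete` in place of `aws s3 cp --recursive`, so that files removed from `public` are also removed from S3. `deploy_site` is a hypothetical helper name.

```shell
# Build the site, upload it and invalidate the CDN cache, stopping at the first failure.
# Usage: deploy_site <bucket-name> <distribution-id>
# (deploy_site is a hypothetical helper.)
deploy_site() {
  bucket=$1
  dist_id=$2
  hugo -v || return 1
  # sync --delete uploads new or changed files and removes ones no longer in public/.
  aws s3 sync public "s3://$bucket" --delete || return 1
  # Quote the path so the shell does not expand the wildcard.
  aws cloudfront create-invalidation --distribution-id "$dist_id" --paths '/*'
}
```

Run it from the site root as `deploy_site your-site-name XXXXXXXXXX`.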

Or, to save time, set up npm to handle your build and deploy. Install node and npm if you haven’t already (I’m assuming you’re going to use Homebrew again).

brew install node

Then check node and npm are installed by checking the version:

npm -v

and

node -v

All good? Carry on then:

npm init

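Create some handy scripts in package.json. In the deploy script, put your bucket name after s3:// and your distribution ID after --distribution-id: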

{
  "name": "hugobuild",
  "config": {
    "LASTVERSION": "0.1"
  },
  "version": "1.0.0",
  "description": "hugo build and deploy",
  "dependencies": {
    "dotenv": "^6.2.0"
  },
  "devDependencies": {},
  "scripts": {
    "testvariable": "echo $npm_package_config_LASTVERSION",
    "test": "echo 'I like you Clarence. Always have. Always will.'",
    "server": "hugo server -w -D -v",
    "build": "hugo -v",
    "deploy": "aws s3 cp public s3:// --recursive && aws cloudfront create-invalidation --distribution-id --paths '/*'"
  },
  "author": "Tom Geraghty",
  "license": "ISC"
}

Then, running:

npm run server

will launch a local server running at http://localhost:1313

Then:

npm run build

will build your site ready for deployment.

And:

npm run deploy

will upload content to S3 and tell CloudFront to invalidate old content and fetch the new stuff.

Now you can start adding content, and making stuff. Or, if you’re like me and prefer to fiddle, you can begin to implement CircleCI and other tools.

Notes: some things you might not find in other Hugo documentation:

When configuring the SSL cert, just wait and be patient for it to load. Reload the page a few times even. This gets me every time. The AWS Certificate Manager service can be very slow to update.

Take a look at custom behaviours in your CF distribution for error pages so they’re cached for less time. You don’t want 404s being displayed for content that’s actually present.

Finally, some things I’m still working on:

CloudFront fetches content from S3 over port 80, not 443, so this wouldn’t be suitable for secure applications because it’s not end-to-end encrypted. I’m trying to think of a way around this.

I’m implementing CircleCI, just for kicks really.

Finally, invalidations. As above, if you don’t invalidate your CF distribution after deployment, old content will be served until the cache expires. But invalidations are inefficient and ultimately cost (slightly) more. The solution is to implement versioned object names, though I’m yet to find a solution for this that doesn’t destroy other Hugo functionality. If you know of a clean way of doing it, please tell me 🙂
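For illustration only, this is roughly what the naming half of versioned object names looks like: a short content hash goes into the filename, so a changed file gets a new URL and never needs invalidating. It is a sketch and not a solution to the problem above, because it does nothing about rewriting the references inside the generated HTML. `versioned_name` is a hypothetical helper, and it assumes the file has an extension.

```shell
# Print a content-addressed name for a file, e.g. style.css -> style.<hash>.css.
# Uses sha256sum where available and falls back to shasum (present on macOS).
# (versioned_name is a hypothetical helper; it assumes the file has an extension.)
versioned_name() {
  file=$1
  if command -v sha256sum >/dev/null 2>&1; then
    hash=$(sha256sum "$file" | cut -c1-8)
  else
    hash=$(shasum -a 256 "$file" | cut -c1-8)
  fi
  # Insert the first 8 hex characters of the hash before the extension.
  printf '%s.%s.%s\n' "${file%.*}" "$hash" "${file##*.}"
}
```

Something like `cp public/css/style.css "$(versioned_name public/css/style.css)"` would then create the versioned copy alongside the original.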